Germany was great. The Max Planck Institute for Math is an incredible place to do research, from the wonderful staff to the good talks to the coffee machine on the main floor. My collaborator and I made a lot of progress, and I learned a lot about the affine Grassmannian. A few pictures:

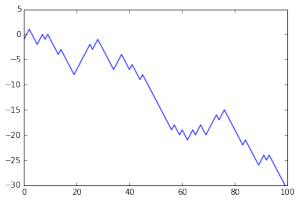

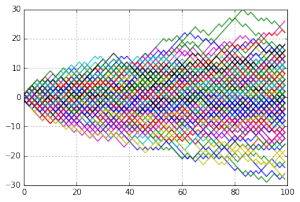

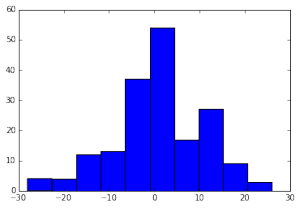

I had a good jetlag-free week in Germany, then came back to the US and got into the work rhythm again.

Side projects: Am I ready for college math? I'm trying to figure out how to give students good resources for dealing with the psychological side of math, since I see so many students drop out because of feelings rather than ability. I put up my first YouTube video, on stereotype threat, while I was in Germany. Just putting it together was interesting! The next set of videos will probably be shorter…

Work projects: I'm leading a seminar on CCAR and stress testing. CCAR stands for "Comprehensive Capital Analysis and Review," a process in which banks have to show the Federal Reserve that they hold enough capital to meet their obligations if the economic situation gets bad. It was created in direct response to the financial crisis of the late 2000s, and there is a TON of math modeling involved.

Next week I'll be helping with the math modeling workshop for high school students at the University of Minnesota, and my group will be working on nitrate runoff in southern Minnesota. Prepping for that has been interesting: it's math and nature (so it's related to a lot of the activities I've written up for Earthcalculus.com), but there are some definite financial aspects, too. Finance and risk management in agriculture are going to be a theme of the Actuarial Research Conference that MCFAM is organizing at the University of Minnesota and the University of St. Thomas this summer!

Also trying to keep up with the weeds in the backyard… things grow so fast!!! Our corn is already showing tassels (new fertilizer regimen), and we've got strawberries galore. The hops are growing like crazy, and we'll have a good crop this year.